Programmed Instruction’s Lessons for xMOOC Designers

Julie S. Vargas

B. F. Skinner Foundation

Abstract

Every month, an increasing number of students take university level courses over the internet. These courses, called MOOCs (Massive Open Online Courses), consist of lectures and demonstrations, quizzes and tests, and internet interactions with other students. MOOCs rely on presentation for teaching. But viewing even the most inspirational lecture does not effectively “shape” behavior. Like tutoring, shaping requires centering instruction around student activity, including its moment to moment progress. In the last century, B. F. Skinner designed a shaping procedure called “Programmed Instruction” (PI). Research on PI revealed features of instruction that would help MOOC designers. In particular, the studies on PI recommend adding more active responding. Centering instruction around student activity not only enhances individual achievement, but also provides data to enable designers to improve the effectiveness of their courses.

Keywords: shaping, MOOC, xMOOC, instruction, Programmed Instruction

Background

The New York Times declared 2012 the year of the MOOC (Pappano, 2012). MOOCs, or Massive Open Online Courses, are generally said to have originated in 2008 with a course known as CCK08: Connectivism and Connective Knowledge, and with a name coined by Dave Cormier (Lowe, 2014, p. x). Twenty-five students at the University of Manitoba in Canada took CCK08 for credit. The course taught by engaging students in blogs and connecting individuals through social media. It also offered readings. The course was opened to the general public without charge or university credit. Over 2200 students joined the free online discussions (Lowe, 2014). This course was not the first online course with large enrollments. Other “open” and free courses had existed on services such as the Khan Academy founded in 2006, ALISON founded in 2007 to help teach job skills (alison.com/subsection/?section=about), and MIT’s OpenCourseWare that began in 2002 (Lowe, 2014). But it wasn’t until 2012 that the most prestigious American universities began offering MOOCs. In February a Stanford professor left academia to found Udacity (DeSantis, 2012a). In April of that same year, two other Stanford professors launched Coursera, and in May, Harvard and MIT announced a joint venture called edX (DeSantis, 2012b). As of 2014, new MOOC offerings show no sign of slowing down.

The Structure of MOOCs

The university courses differed from the CCK08 “connectivity” course. Instead of asking students to learn through discussions with each other (called a “cMOOC” format), university MOOCs (called xMOOCs) are designed like typical university courses. These xMOOC offerings typically start and end on specific dates, present content through lectures or demonstrations, give exercises, and evaluate with quizzes and tests (Decker, 2014). With thousands of students enrolled, university on-line courses do not offer personal contact with the main instructor. Most provide interaction between students through blogs, email, or other on-line discussion vehicles. Students worldwide can take xMOOC courses without charge, or for a modest fee for a certificate of successful completion. A certification of successful completion from a major university’s xMOOC does not earn the same credit as a course taken as a regularly enrolled student. The rest of this article concerns university courses (xMOOCs).

Teaching Tools in xMOOCs

Students taking internet courses from universities are expected to learn through online presentations that are intended to “prime the attendees for doing the next exercise” (Norvig, 2013). Presentations vary in format. Some consist of video lectures that students view the same way they would if sitting in a university lecture hall. Others present moving arrows or writing that appear as words are spoken. Diagrams or models simulate procedures or show structures of objects as they are assembled or disassembled. Videos of actual experiments or historical footage are also found in the presentations. While these “instructional” parts of courses present content in interesting and informative ways, no responding is required of viewers during the presentations. The learner remains in a nonparticipant role. Showing and telling what to do is not teaching (Vargas, 2012). Designers of xMOOCs realize that presentations alone do not guarantee mastery: hence courses include more active behavior through assignments. Assignments essentially outsource teaching to the students. Whatever they cannot yet do, they must figure out by working through assignments, or by seeking help from peers, but they don’t receive individual guidance by the instructor. In most xMOOCs students can attempt problems multiple times. Mistakes, however, reveal the failure of presentations to teach the skills required. In relying on presentation and practice exercises, xMOOCs have little instructional advantage over the average textbook. Textbooks can be accessed individually according to each student’s preferred time. They provide expert content with diagrams and pictures. They provide exercises, and most give a scoring key to check answers. Using a textbook, students can interact with others when needed. As an instructional method neither books nor presentations can be counted upon to guarantee mastery.

Evaluation Methods in xMOOCs

Following assignments, most xMOOCs give quizzes or tests. Like assignments, quizzes let students assess their performance. Test results, however, do not give students feedback at the point at which it is needed. Finding out that answer number six is incorrect does not tell a student where he or she got off track. For instructors, evaluation is used for grading. The behavior tested becomes the operational definition of a course’s objectives. Even in on-campus courses, the “behavior” or “performance” objectives are worth examining, as a physics professor at Harvard University found out. Eric Mazur taught a traditional on-campus lecture course on physics. He read an article that argued that while physics students could solve problems with formulas, they had no idea of what their answers meant in daily life. He reported his reaction: “Not my students!” (Mazur, 1997, p. 4). But he gave a test to find out. To his alarm, large numbers of students could not select the correct answer to simple questions like identifying which of 5 paths an object would follow if dropped from a moving airplane. Mazur reconsidered his course objectives and redesigned his course to meet them. Although not trained behaviorally, he added more responding and feedback, part of what a behavior analyst would recommend. He divided his lectures into short segments, each followed by a multiple-choice question. Every student answered the question individually and the answers were electronically summarized for Mazur. Then students discussed their answers with peers before Mazur gave feedback on which option was correct and why. Interestingly Mazur reports that not only did his new procedure teach the “understanding” that his original course had lacked, but student performance on the traditional physics exam remained high. That exam required traditional physics problem-solving and computation. 
With thousands of students enrolled, most xMOOC courses employ only multiple-choice questions with fixed alternatives. The behavior required to pass these tests differs from the objectives of a college education designed to enable students to function in a variety of possible futures. Multiple-choice items do not prepare students for a career or for skills useful in daily life. Conducting an experiment, writing an article, or planning a budget requires more complex behavior than selecting the best of four or five given options.

Beyond Multiple-Choice Evaluation

The shortcomings of multiple-choice items for evaluating complex skills have not been lost on xMOOC designers. For performances like essay writing, automated scoring programs exist. These programs assign overall grades for essays. Automated essay grading programs have been found to match well the evaluations given by human scorers (Wang & Brown, 2007). They check grammar, length of sentences and paragraphs, vocabulary, and other structural elements. Evaluation of structural elements is helpful, especially for writers whose first language is not English, but automated graders cannot judge sense from nonsense, accuracy of information, or quality of argument. An automated grading program gave Lincoln’s Gettysburg Address a grade of 2 out of 6 (National Council of Teachers of English, 2013). As with assignments and quizzes, scoring programs that evaluate finished paragraphs do not help students during the composing of a paper. In addition to scoring programs, xMOOCs may give individualized feedback for complex behavior from other students taking the same course. The quality of this feedback depends, of course, on the skills of the peers evaluating. Whether with automated scoring or through peer evaluation, xMOOC feedback is not given to students during the building of the skills they are to acquire. xMOOC designers extol tutoring as the epitome of instruction, but the actual “teaching” part of xMOOCs remains the lecture-cum-exercises. Work done in the last century with Programmed Instruction provided a tutoring-like format. Experience gained with Programmed Instruction would be useful to xMOOC designers.

Programmed Instruction

Programmed Instruction came out of the work of B. F. Skinner. Skinner plunged into instructional design in 1953 as a result of a Father’s Day visit to his younger daughter’s school (Skinner, 1983, p. 11; Vargas & Vargas, 1996, p. 237). Sitting on one of the fourth-grade chairs, he witnessed a standard lesson. The teacher showed how to solve a simple math computation, then gave out worksheets. Skinner observed some children struggling and others working quickly with looks of resignation or boredom. Suddenly he realized that the teacher had been given an impossible task. No teacher could individually “shape” the performance of each learner in a class of 20 or more students. Shaping, like good tutoring, teaches new behavior by reinforcing the best of an individual’s present behavior, with each next step depending on that learner’s progress. Skinner and his colleagues had spent over 20 years researching how behavior is selected through immediate reinforcement of properties of actions (Ferster & Skinner, 1957/1997; Skinner, 1953). The contingencies of the traditional classroom violated all of the principles he and his colleagues had discovered. Teachers needed help. Skinner designed a machine to solve the teacher’s problem. There were no microcomputers back in the late 1950s, so Skinner made a mechanical machine. His first machines provided practice on randomly presented math problems. Skinner demonstrated his machine at a conference, and when Sidney Pressey heard the talk, he sent Skinner articles about the “teaching machine” he had designed earlier (Skinner, 1983, pp. 69-70). Pressey’s machine had four knobs for answering randomly presented multiple-choice problems. He had found that giving immediate feedback after each answer instead of at the end of the set improved performance (Pressey, 1926). Skinner’s machine, with sliders from 0 to 9, required “composed” responding, not multiple-choice (Skinner, 1983, p. 65).
Pressey, equating his machine with Skinner’s, also missed the primary message of Skinner’s talk: that the science of behavior could be used to shape new behavior, not just to give practice on existing but weak skills. In his talk, Skinner described shaping procedures. He explained how you could shape moving in a figure-eight pattern by reinforcing “successive approximations” of a pigeon’s turn. He talked of maintaining skills with schedules of reinforcement, the effects of which “would traditionally be assigned to the field of motivation” (Skinner, 1968/2003, p. 11). Randomized practice would not do. Instruction had to involve special sequencing he called “Programmed Instruction” (PI). Within a couple of years of his Father’s Day visit, Skinner, with the help of James G. Holland, programmed his own course at Harvard. They wrote sequences that introduced each new concept in a series of “frames.” A frame was about the size of today’s cell phone or tablet screen. Every frame required student responding to the “content” presented at that step. Frames within a unit built on one another, so students solved increasingly complex problems as they progressed. The Programmed Instruction was delivered by a mechanical machine that presented frames one at a time, gave space for writing, and with the movement of a knob, uncovered the correct terms as the student’s writing moved under Plexiglas® where it could not be changed. Students then marked what they wrote as correct or incorrect, and the next frame appeared (Skinner, 1999, pp. 192-197). Everything students wrote, frame by frame, was saved, along with their evaluations of what they wrote. Skinner followed his own scientific principles about shaping. That is, “mistakes” were not defects in student performance, but rather defects in the sequences of instruction. He and Holland revised their program, frame by frame, until the sequences shaped individual responding to the proficiency desired.
When no manufacturer in those days before microcomputers would produce a machine meeting Skinner’s criteria, he and Holland put their program into a paper format (Holland & Skinner, 1961). Unlike machine formats, the paper formats did not enforce the contingencies of a machine: Students could peek at answers before responding. But paper versions at least made the steps available. Book forms of Programmed Instruction exploded in the 1960s. A 1967 Bibliography of Programs and Presentation Devices required 123 pages to list available Programmed Instruction programs and the 116 companies and educational institutions that produced them (Hendershot, 1967). Programs taught entire courses in adult basic education, business, construction, foreign languages, music, science, and law (see also Entelek, 1968). Anyone, it seemed, could write Programmed Instruction. All you needed to do was to take text, remove a few words here and there, and voilà! No understanding of behavioral principles appeared to be needed. Unfortunately, behavioral expertise was required (Holland, Solomon, Doran, & Frezza, 1976; Vargas & Vargas, 1992). Skinner’s own design drew from his analysis of verbal behavior (Skinner, 1957/1992).

Properties that Determine Effectiveness of Instruction

Many formats called “Programmed Instruction” existed (Vargas & Vargas, 1992). Most followed Skinner’s format of text with blanks to fill in at each step. Educators evaluated this new teaching procedure. Some studies compared Programmed Instruction with simply reading the same material with no blanks to fill in. Results of these comparisons were contradictory. Holland and other researchers examined programs used in comparison studies to find out why results differed. Properties investigated fell into three major categories: Property one involved antecedent (discriminative) control; that is, the specific features of content that controlled responding at each step. Property two considered structural elements that determined attention patterns while viewing individual frames. Property three was density of active responding. Some Programmed Instruction writers added a fourth property: the degree to which the sequencing of frames adjusted to each student’s moment-to-moment progress. In the last century, the procedures available lacked the precision that today’s internet could provide.

Properties of Antecedent Control

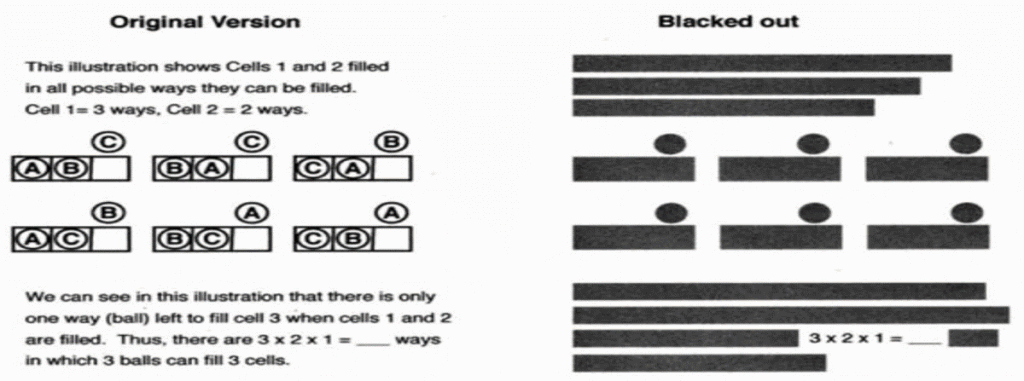

Part of education involves responding to the appropriate features of a problem. Everyone is aware of inappropriate antecedent control when a student cheats. In cheating, a student supplies an answer scored as “correct” or a paper graded as “A”, but what controlled the student’s behavior is not what the assignment intended. Copying is under different antecedent control from solving or analyzing. In any course, instructors need to attend to antecedent control. Even if students are answering “correctly”, are they doing so for the right reasons? To examine the features of Programmed Instruction that controlled student responding, Holland invented the “blackout ratio” (Holland, 1967; Holland & Kemp, 1965). The premise was simple: If you could respond “correctly” with parts of the “instruction” blacked out, the obscured material was not controlling your answer. The more material that could be blacked out, in other words, the less that content contributed to learning. Figure 1 shows an example of a high blackout ratio: Anyone could answer this question without reading a word of the section intended to teach probability. In contrast, a low blackout ratio indicates that most of the “instructional” material exerts discriminative control over responding. In looking at Programmed Instruction lessons that taught no better than just reading the same material, Kemp and Holland (1966) found that those lessons had high blackout ratios. As with the statistics program in Figure 1, student responding was not controlled by the relevant properties of the topic. Students could respond “correctly” for the wrong reasons. Consider the talk of a lecturer as equivalent to the textual explanation in Figure 1, and you can imagine how difficult it would be for xMOOC designers to identify features of their explanations that exert discriminative control over student performance.
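The arithmetic behind a blackout ratio can be illustrated with a minimal Python sketch. It assumes the ratio is taken as the share of a frame's words that can be obscured without preventing a correct response; which words qualify must be determined empirically with students, so the positions below are purely hypothetical. The function name `blackout_ratio` and the sample frame are illustrative and are not taken from Holland's materials.

```python
def blackout_ratio(frame_words, blacked_out_positions):
    """Share of a frame's words that were obscured.

    frame_words: list of the words in one frame.
    blacked_out_positions: indexes of words that testing showed could be
    hidden while students still answered correctly (hypothetical data here).
    """
    if not frame_words:
        raise ValueError("a frame must contain at least one word")
    # Ignore any index that does not point at a word in this frame.
    valid = {i for i in blacked_out_positions if 0 <= i < len(frame_words)}
    return len(valid) / len(frame_words)


# Hypothetical frame: only the first two words need to be read to answer.
frame = "Positive reinforcement increases the rate of a response".split()
ratio = blackout_ratio(frame, {2, 3, 4, 5, 6, 7})
print(ratio)  # 0.75
```

A value near 1 would mark a frame in which most of the text exerts no control over the answer; a value near 0 would mark a frame that must be read to be answered.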

Structural Elements that Determine Patterns of Attention

Part of antecedent control involves patterns of attention. In an online course, where do students look during a lecturer’s presentation? Do their eyes follow the diagram arrows that illustrate a point, or do they focus on the lecturer’s face, or worse, on their cell phones? When a screen presents textual material, what part do students view first? The latter question was possible to investigate with Programmed Instruction. Doran and Holland (1971) tracked the eye movements of students as they went through two versions of Programmed Instruction. The first, a low blackout version, consisted of material taken from the program used in Skinner’s course. Students had to read most of the words in order to answer correctly. The second version was constructed as a high blackout version. The same sentences were presented, but different words were requested. To make it unnecessary to read all the words, the high blackout version omitted words that could be filled in by reading only adjacent words. For example, “reinforcement” was easy to fill into a blank following “positive” even without reading anything else. The researchers controlled for unit difficulty: Half of the students began the first unit with a low blackout version then received the high blackout version for the subsequent unit. The other half of the students began with the high blackout version then received the low blackout version for their next unit. Results showed clear differences between eye tracking with high and low blackout ratio material. With the high blackout version, the one with poor discriminative control, student eye movements flickered all over the screen. They didn’t read everything. They skipped around. With the low blackout ratio, where control lay in the entire material, student eye movement showed the typical pattern of reading (Doran & Holland, 1971). This principle still holds. A recent study of eye-tracking when viewing home pages showed a similar flicking around the page before redesign. The home page was changed to control focusing onto critical parts. Where commercial interests are concerned, home pages typically “go through many iterations, repeatedly fine-tuning certain elements of the page in order to control the user’s eye movement patterns, creating a natural user experience that avoids user confusion and frustration” (Rowell, 2010). Similar fine-grained analyses are, to my knowledge, not conducted with xMOOCs. No one tracks where students are looking while viewing presentations.

Figure 1. Frame in original presentation (left) and in the blackout version (right). Courtesy of the Society for the Experimental Analysis of Behavior.

Density of Active Responding

In the 1990s, with the coming of microcomputers, instructional designers adopted computer-delivered formats for Programmed Instruction. Computer formats enabled research on the role of active responding. Using a computer-delivered Programmed Instruction lesson, Kritch and Bostow (1998) varied “student response density.” They created three versions of a lesson to teach how to write code using a computer authoring language. One version required responding to content in every screen of material. A second version asked for responding in only half of the screens. A third version presented material to read with no responding. To control for time spent with each screen, each student taking the “no active responding” version was yoked to one student taking the highest density version. The “no active responding” student thus received his or her next screen only when the yoked “high density” student filled in an answer and moved on. Thus, whether actively responding or not, both students saw each screen of content for an equal amount of time. Not surprisingly, the more actively students responded during instruction, the better they performed. Not only did they score better on the unit posttest, they also wrote better code when given a totally new computer authoring task.

Adjusting Sequences during Shaping

Shaping requires that teaching actions continually adjust to learner behavior just as much as learner actions adjust to instructional steps. Skinner’s teaching machine adjusted to missed items by bringing them back until they were completed correctly, a poor way to help the slower learner. A format called “gating” provided more adaptation to individual progress by beginning each sequence with a quiz item. If missed, the student completed items to teach that skill. If answered correctly, the next test frame appeared. Gating helped alleviate the need for repeating missed items. But even the best Programmed Instruction of the last century did not continually adjust to each action taken by the learner the way a good tutor would. Flexibility in sequencing for each individual could, however, be done with today’s internet tools.
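The gating format described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reconstruction of any historical program: the `Unit` class, the frame labels, and the `answers_correctly` callback standing in for a student's response are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Unit:
    """One skill: a quiz ("gate") item plus the frames that teach the skill."""
    gate_item: str
    teaching_frames: List[str] = field(default_factory=list)


def gated_sequence(units: List[Unit],
                   answers_correctly: Callable[[str], bool]) -> List[str]:
    """Return the items a student would see under gating.

    Each unit opens with its gate item. A correct answer moves the student
    straight to the next unit's gate; a miss routes the student through the
    teaching frames for that skill first.
    """
    presented = []
    for unit in units:
        presented.append(unit.gate_item)
        if not answers_correctly(unit.gate_item):
            presented.extend(unit.teaching_frames)
    return presented


# Hypothetical student who already has skill A but not skill B.
course = [Unit("gate-A", ["A1", "A2"]), Unit("gate-B", ["B1"])]
path = gated_sequence(course, lambda item: item == "gate-A")
print(path)  # ['gate-A', 'gate-B', 'B1']
```

The sketch adjusts only once per unit, at the gate, which is the limitation the paragraph above points out: the sequence does not respond to each action the learner takes within a unit.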

Lessons from Programmed Instruction

Any instructional designer could benefit from behavior analytic principles (Vargas, 2013, Part III). First, behavioral instructors state goals in terms of student behavior. Objectives like “understanding” are translated into observable actions that indicate when the inferred “understanding” has been achieved. These “behavioral objectives” define skills students should have mastered by their final evaluation. Next, shaping steps, that is, the actions students take to achieve competence, are analyzed. As students respond, instruction adjusts to individual progress. All this requires high rates of student responding. The need to increase active student participation has not been lost on xMOOC designers. Norvig (2013) describes a course with lectures divided into two- to six-minute segments with student responding in between. Science courses have added more responding by adding simulated labs. Students “click”, “drag”, and type on a screen to virtually “perform” experiments, or even to operate actual equipment located elsewhere (Waldrop, 2013). Many internet courses prescribe interacting with fellow students to increase activity. Without seeing what peers are saying, however, instructional designers cannot tell whether, or what, peer interactions help teach. All of these procedures to increase active student involvement are gradually approximating Programmed Instruction’s procedures. With more frequent data, like that gathered after each two- to six-minute lecture segment, xMOOC designers can locate “junctures” where course materials need improving (Norvig, 2013). The data from Programmed Instruction would provide even more details. xMOOC courses require a team that includes a content expert, a behavior analyst, and a data-analysis expert (Vargas, 2004). Currently instructional teams use only part of the data that could be available. They record average sign-on and drop-out rates, minutes spent on-line, educational level and nationality of enrollees, and opinions of students.
These data serve marketing purposes more than improvement of instruction. One summarizing website gives data on over 150 courses, isolating completion rates by length of course (number of weeks), number of students enrolled, platform (Coursera, edX, FutureLearn, Open2Study, Udemy, and 11 others), and type of assessment (peer grading or auto grading only) (www.katyjordan.com/MOOCproject.html). The data show higher completion rates for shorter courses and for courses with fewer students. These data, while interesting, do not help improve instruction. Cohort analyses of student gains measured from pretest to posttest, along with number of attempts on homework or quizzes, reveal much about the kinds of students who take a course, but little about what students were doing to learn (Colvin et al., 2014). The designer cannot tell where critical steps were missing, what steps are unnecessary, or which parts were particularly effective. Average summary data also fail to capture individual variability. They do not adjust to progress during the acquisition of skills, something with which a behavior analyst could help. If instruction were broken down into Programmed Instruction-sized steps, today’s computers could adjust steps as a learner goes through them. xMOOCs could record the speed with which each step is correctly completed. Rapid performance could signal the need to shift to more difficult steps or to a subsequent section. Boredom from repeating skills already mastered could be avoided. Conversely, slow responding could transfer a learner to a sequence of missing prerequisites or smaller steps until the learner responds more rapidly. Sequence alterations of both kinds would be seamless to the learner. He or she would not know whether the sequences taken were advanced or remedial. Such shaping would come close to tutoring by a live teacher.
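The speed-based adjustment proposed above could be prototyped along the following lines. This is a minimal sketch under stated assumptions: the function name `choose_next_sequence`, the use of the median, and the 5-second and 20-second thresholds are all hypothetical, and real cutoffs would have to come from data on the steps being taught.

```python
from statistics import median


def choose_next_sequence(correct_latencies, fast=5.0, slow=20.0):
    """Pick the next kind of sequence from recent response speeds.

    correct_latencies: seconds taken on recently completed correct steps.
    fast, slow: hypothetical thresholds in seconds, not empirical values.
    """
    if not correct_latencies:
        return "continue"       # no data yet: stay on the current sequence
    typical = median(correct_latencies)
    if typical < fast:
        return "advance"        # rapid responding: move to harder steps
    if typical > slow:
        return "remediate"      # slow responding: prerequisites or smaller steps
    return "continue"


print(choose_next_sequence([2.0, 3.0, 4.0]))     # advance
print(choose_next_sequence([25.0, 30.0, 22.0]))  # remediate
print(choose_next_sequence([10.0, 12.0]))        # continue
```

The median is used here so that one unusually slow or fast step does not by itself change the sequence; that is a design choice of the sketch, and a designer might prefer a different summary of recent performance.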

Conclusion

The xMOOCs offered by today’s universities rely on lectures and demonstrations as their primary teaching tool. Presentations put students in a passive role. Behavioral research on Programmed Instruction in the last century revealed the importance of active student responding and of subtle features of formatting that determine moment-to-moment student progress. One cannot expect xMOOC designers to abandon lectures and presentations in favor of writing the complex sequencing of steps that Programmed Instruction requires for a college-level course. But they would benefit from what behavioral science has to offer. Shaping student competence requires a lesson to adapt each learning step to the variability in individual student actions. Increasing individual responding also provides detailed information for improving lesson content and sequencing. That information, in turn, encourages more analysis of student responding, and perhaps a realization that behavioral expertise is needed. We have a science that shows how behavioral change occurs. It is time to use it.

References

- Colvin, K. F., Champaign, J., Liu, A., Zhou, Q., Fredericks, C., & Pritchard, D. E. (2014). Learning in an introductory physics MOOC: All cohorts learn equally, including an on-campus class. International Review of Research in Open and Distance Learning, 15, 4.

- Decker, G. L. (2014). MOOCology 1.0. In S. D. Krause & C. Lowe (Eds.), Invasion of the MOOCs: The Promise and the Perils of Massive Open Online Education. Anderson, SC: Parlor Press.

- DeSantis, N. (2012a, January 23). Stanford professor gives up teaching position. Hopes to reach 500,000 students at on-line start-up. The Chronicle of Higher Education. Retrieved November 17, 2014 from http://chronicle.com/blogs/wiredcampus/stanford-professor-gives-up-teaching-position-hopes-to-reach-500000-students-at-online-start-up/35135

- DeSantis, N. (2012b, May 2). Harvard and MIT put $60-million into new platform for free online courses. The Chronicle of Higher Education. Retrieved November 17, 2014 from http://chronicle.com/blogs/wiredcampus/harvard-and-mit-put-60-million-into-new-platform-for-free-online-courses/36284

- Doran, J., & Holland, J. G. (1971). Eye movements as a function of the response contingencies measured by blackout technique. Journal of Applied Behavior Analysis, 4, 11-17.

- Entelek, Inc. (1968). Programmed Instruction Guide. Newburyport, MA: Author.

- Ferster, C. B., & Skinner, B. F. (1997). Schedules of Reinforcement. Cambridge, MA: B. F. Skinner Foundation. (Original work published in 1957).

- Hendershot, C. D. (1967). A Bibliography of Programs and Presentation Devices. Bay City, MI: Carl H. Hendershot Ed.D.

- Holland, J. G. (1967). A quantitative measure for programmed instruction. American Educational Research Journal, 4, 87-101.

- Holland, J. G., & Kemp, F. D. (1965). A measure of programming in teaching machine material. Journal of Educational Psychology, 56, 264-269.

- Holland, J. G., & Skinner, B. F. (1961). The Analysis of Behavior. New York, NY: McGraw-Hill.

- Holland, J. G., Solomon, C., Doran, J., & Frezza, D. A. (1976). The Analysis of Behavior in Planning Instruction. Reading, MA: Addison-Wesley Publishing Co.

- Kemp, F. D., & Holland, J. G. (1966). Blackout ratio and overt responses in programmed instruction: A resolution of disparate results. Journal of Educational Psychology, 57, 109-114.

- Kritch, K. M., & Bostow, D. E. (1998). Degree of constructed-response interaction in computer-based programmed instruction. Journal of Applied Behavior Analysis, 31, 387-398.

- Lowe, C. (2014). Introduction: Building on the tradition of CCK08. In S. D. Krause & C. Lowe (Eds.), Invasion of the MOOCs: The Promise and the Perils of Massive Open Online Education. Anderson, SC: Parlor Press.

- Mazur, E. (1997). Peer Instruction: A User’s Manual. Upper Saddle River, NJ: Prentice Hall, Simon & Schuster.

- National Council of Teachers of English. (2013, April). Machine scoring in the assessment of writing. Retrieved July 24, 2014 from http://www.ncte.org/press/issues/robograding2

- Norvig, P. (2013, August). Massively personal: How thousands of on line students can get the effect of one-on-one tutoring. Scientific American, 309, 55. doi:10.1038/scientificamerican0813-55

- Pappano, L. (2012, November 2). The year of the MOOC. The New York Times. Retrieved November 17, 2014 from http://www.nytimes.com/2012/11/04/education/edlife/massive-open-online-courses-are-multiplying-at-a-rapid-pace.html

- Pressey, S. L. (1926). A simple apparatus which gives tests and scores – and teaches. School and Society, 23, 373-376.

- Rowell, E. (2010, November 5). Eye movement patterns in web design [Web log post]. Retrieved July 23, 2014 from http://www.onextrapixel.com/2010/11/05/eye-movement-patterns-in-web-design/

- Skinner, B. F. (1953). Science and Human Behavior. New York, NY: The Macmillan Company.

- Skinner, B. F. (1992). Verbal Behavior. Cambridge, MA: The B. F. Skinner Foundation. (Original work published in 1957).

- Skinner, B. F. (1999). Cumulative Record: Definitive Edition. Cambridge, MA: The B. F. Skinner Foundation. (First edition published in 1959).

- Skinner, B. F. (2003). The science of learning and the art of teaching. In The Technology of Teaching. Cambridge, MA: The B. F. Skinner Foundation. (Original work published in 1968).

- Skinner, B. F. (1983). A Matter of Consequences. New York, NY: Knopf.

- Vargas, E. A. (2004). The triad of science foundations, instructional technology, and organizational structure. The Spanish Journal of Psychology, 7, 141-152.

- Vargas, E. A. (2012). Delivering instructional content–at any distance–is not teaching. Psychologia Latina, 3, 45-52.

- Vargas, J. S. (2013). Behavior Analysis for Effective Teaching (2nd Ed.). New York: Routledge, Taylor & Francis.

- Vargas, E. A., & Vargas, J. S. (1992). Programmed instruction and teaching machines. In R. P. West & L. A. Hammerlynck (Eds.), Designs for excellency in education: The legacy of B. F. Skinner. (pp. 34-69). Longmont, CO: Sopris West, Inc.

- Vargas, E. A., & Vargas, J. S. (1996). B. F. Skinner and the origins of Programmed Instruction. In L. D. Smith & W. R. Woodward (Eds.), B. F. Skinner and Behaviorism in American Culture (pp. 237-253). London: Associated University Presses.

- Waldrop, M. M. (2013). The virtual lab. Nature, 499, 268-270.

- Wang, J., & Brown, M. S. (2007). Automated essay scoring versus human scoring: A comparative study. Journal of Technology, Learning, and Assessment, 6(2). Retrieved July 30, 2014 from http://ejournals.bc.edu/ojs/index.php/jtla/article/view/1632/1476
