Learning Science Design and Development Requirements: An update of Hendrix and Tiemann’s “Designs for Designers”

Diseño basado en la ciencia del aprendizaje y requisitos de desarrollo: Una actualización de “Diseño para diseñadores” de Hendrix y Tiemann

T. V. Joe Layng

Change Partner

Abstract

As new “technologies of tools” emerge for the delivery of instruction, it is of critical importance that we apply “technologies of process,” derived from the basic and applied learning sciences, to the design and development of instruction (Layng & Twyman, 2013). Those who would design effective instruction must master a range of skills, competencies and objectives that have been derived from over forty years of research and application. Mastery is not easy and requires significant training. This article describes in detail these requirements. They are divided into six separate domains: analysis, design, media selection, testing (formative evaluation), system management, and research evaluation. The requirements listed here provide a guide for anyone looking to become an effective instructional designer capable of making use of current and emerging software and hardware technologies.

Keywords: learning science, matrix, instructional design, instruction

Resumen

Conforme emergen nuevas “tecnologías de las herramientas” para llevar a cabo instrucción, es de importancia crítica que apliquemos “la tecnología del proceso”, derivada de la ciencia del aprendizaje tanto básica como aplicada, para el diseño y desarrollo de la instrucción (Layng & Twyman, 2013). Aquellos que quieran diseñar instrucción efectiva deben dominar un rango de habilidades, competencias y objetivos que se han generado después de cuarenta años de investigación y aplicación. El dominio no es fácil y requiere entrenamiento significativo. Este artículo describe en detalle estos requisitos. Se dividen en seis dominios separados: análisis, diseño, selección de medios, pruebas (evaluación formativa), manejo de sistemas y evaluación de la investigación. Los requisitos enlistados proveen una guía para cualquiera que busque convertirse en un diseñador instruccional efectivo y capaz de hacer uso de tecnologías de software y hardware que están emergiendo actualmente.

Palabras clave: ciencia del aprendizaje, matriz, diseño instruccional, instrucción

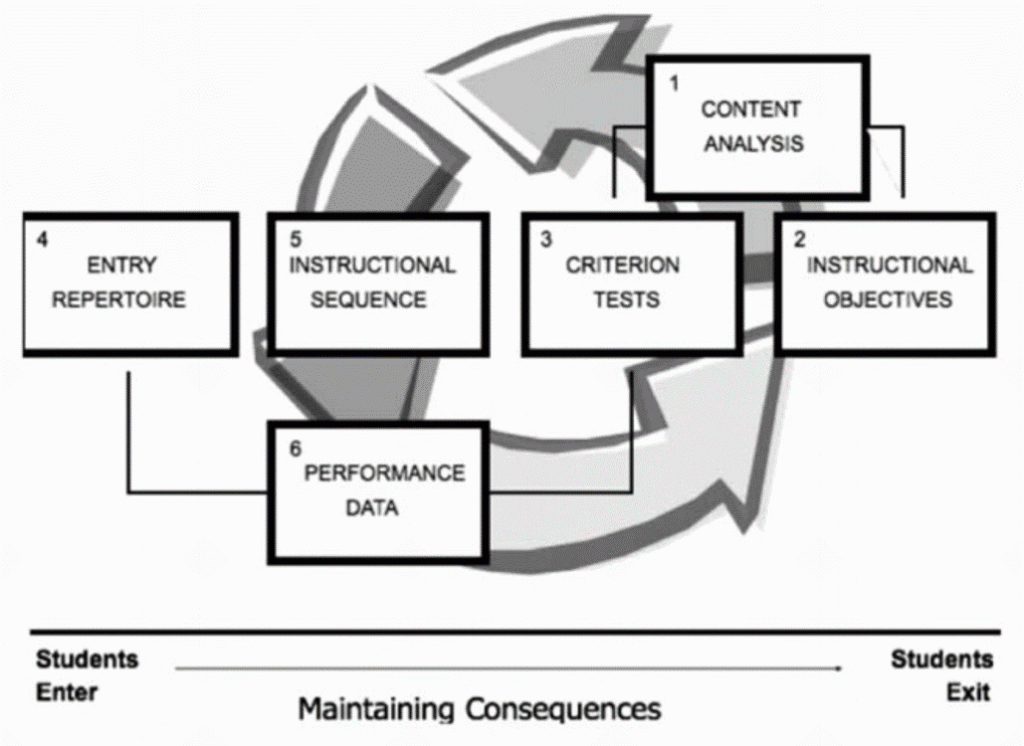

In 1971 Hendrix and Tiemann wrote, “A new professional is needed to assist in the complex process of developing quality instruction. … [this individual] will be ‘a versatile and highly trained instructional designer.’” To meet this goal a group from the Upper Midwest Regional (educational) Laboratory (USA) painstakingly set out what instructional developers needed to do if high quality instruction were to be developed. That effort was based on the extensive work done in the learning laboratory and in the application of learning theory to educational problems. Hendrix and Tiemann produced a series of objectives originating in that report which provided a guide for anyone looking to master the competencies required to become an effective instructional developer. Over the years, their objectives have remained remarkably descriptive of what it takes to design high quality instruction. With some additions and modifications, much of what they described is entirely germane to the design of quality learning environments of all types across a vast range of delivery systems and technologies. Accordingly, much of what they specified is included here with some editing, updating, and expanding upon their original work. In addition, some entirely new items have been incorporated into the requirements. It is a testament to the work done by the investigators of that day that their work remains relevant now and will continue to be relevant in the future.
Today, however, instructional design is an eclectic field that finds many of its practitioners working in the corporate environment. As such, the instructional design field, for the most part, has moved away from its learning science and education focus to become more expedient in its applications, thus requiring a somewhat different, though overlapping, set of skills (see Koszalka, Russ-Eft, & Reiser, 2013, for a good overview of the competencies for instructional designers of this type).
In contrast, the requirements described here find their roots in the scientific study of learning and behavior, and are reflective of basic and applied research as well as application. New technologies are continually being developed that are providing new opportunities for practitioners who have met the requirements to make full use of them. The requirements described here are an attempt to make explicit what is required, and serve as a guide for those who wish to contribute to the science and technology of the instructional/learning process and its application (see Layng & Twyman, 2013, for a discussion on the distinction between the technology of tools and the technology of process in teaching and learning). The following learning science design and development requirements are divided into six categories, an addition of one from the original five. Further, more recent work on learning hierarchies and generative instruction has been added. These are terminal requirements; each requirement would have a considerable list of “enabling objectives” that would lead to mastery. The goal is to provide a list of requirements that a new generation of educational entrepreneurs must meet in order to make full use of the new technology of educational tools.
The entire design process has been described elsewhere (see Leon, Layng, & Sota, 2011; Twyman, Layng, Stikeleather, & Hobbins, 2004), but in brief, it begins with the analysis of content and the formulation of objectives, followed by the construction of criterion evaluation, the identification of the entry repertoire, the design of instruction, the use of performance data during developmental testing to revise the design, the creation and use of maintaining consequences to ensure student engagement, and the application of a range of planning and management skills to ensure optimal development. This process is represented in Figure 1.
The requirements listed do not follow the design sequence since many of them are used in the various phases of development. In addition, unlike the design process, the competency categories listed need not be acquired in any specific order.

Analysis

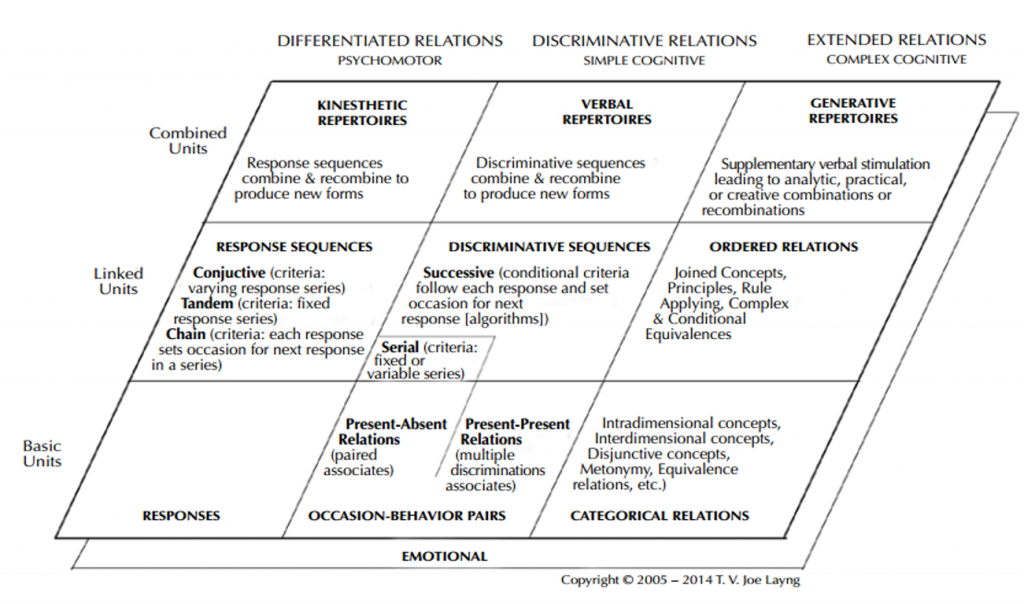

Figure 1. A diagram adapted from Markle and Tiemann (1967) representing the steps of the design process.

The design of instruction or learning environments begins with analysis. Designers must have a clear idea of what the accomplishments of learners will be when they complete the instruction or other learning activities designed to teach. This begins with what is called a content analysis and culminates in objectives based upon that analysis. Often, these two processes interact: sometimes we have a goal or objective we would like to achieve and other times we must understand the subject matter to formulate an objective. Classifications of skills based upon types of learning have evolved over the past few decades to help with that task. The one used as a reference here is a modification by Layng (2007; see also Sota, Leon, & Layng, 2011) of a classification scheme developed by Philip Tiemann and Susan Markle (1991). A more detailed description can be found therein, but a brief description here may be helpful. In this classification scheme, three main types of learning are represented by columns in a matrix: Differentiated Relations (Psychomotor), Discriminative Relations (Simple Cognitive), and Extended Relations (Complex Cognitive). Three types of behavioral units are represented as rows in the matrix: Basic Units, Linked Units, and Combined Units. Each cell, the intersection of a row and a column, describes a type of learning. A simplified version of the matrix is shown in Figure 2. In the first column, the emphasis is on the topography and fluent performance of the response or response sequence. Discrimination is often important to the successful completion of a sequence or in the production of complex response combinations; however, the emphasis is on the precision with which the response can be performed (e.g., ice skating, gymnastics). In the second column, the opposite holds. The emphasis here is on the discrimination. The response is typically considered to be in the student’s repertoire, and the focus is on the conditions under which the response will be performed. The relations here range from simple occasion–behavior relations (paired associates) to successive conditional discriminations (algorithms) to verbal repertoires that are a product of often complex combinations and recombinations of response sequences. One defining characteristic of this column is that the occasion–behavior topographies taught are the same as those that are tested. The third column describes extended relations, which means that the specific occasion–behavior topographies taught are not the ones tested. In essence, extension to new conditions is required. For intradimensional concepts, the critical features guiding responding are found within a single stimulus or event. This means, for example, that a new example of a single chair not provided in instruction would be correctly identified as a chair while a new nonexample (e.g., a love seat) would be excluded.
For interdimensional concepts, the critical attributes that define the relation between at least two events (stimuli) would be learned and applied to new situations not involving the original stimuli (e.g., things that are opposite, distant, wider, larger, smaller, or that require a coordinate concept to be taught such as me, you, believe, know, etc.). Principles similarly are applied to new situations. Principles describe the relation between concepts, and the range of application is limited by the smallest range of extension of a component concept (see Tiemann & Markle, 1991). Principles also involve “when” aspects that set the conditions under which the principle holds. The generative repertoires are those that result in producing behavior that meets requirements (typically encountered in problem solving) not already in the student’s repertoire or that are not a “simple” extension of a previously taught relation. These repertoires typically are categorized as reasoning, inquiry, analytical thinking, and more advanced problem solving (see, for example, Robbins, 2011). For the designer, analysis of content in terms of these types of learning is essential to successfully building learning environments and to adequately describing objectives.
Objectives comprise four qualities: they describe the context (including tools to be used), the domain (such as the range of examples and nonexamples used in concept testing), the behavior, and the performance standard. Without a thorough content analysis it is nearly impossible to write worthwhile objectives, and lack of adequate analyses often results in limited or vague objectives. A popular taxonomy is Benjamin Bloom’s analysis of learning domains (Bloom, Engelhart, Furst, Hill, & Krathwohl, 1956). Bloom’s taxonomy is not an analysis of content, but a representation of general behaviors that comprise a range of skills. Even if objectives are to be stated in accord with Bloom, a content/task analysis is still required. This becomes clearer when we consider where Bloom’s learning types fall within the matrix used here. We find the first level, Knowledge, falling into the simple cognitive column and primarily into the basic units cell and some of the linked units cell. Depending on the precise performance requirement, different design considerations may be required for two different performances, both of which might be considered “knowledge.” The same is true for the other levels as well: Comprehension: verbal repertoires and discriminative sequences; Application: categorical relations and some ordered relations; Analysis: the entire discriminative relations column combined with elements from the basic and linked units in the extended relations category; Synthesis: elements from all three columns; Evaluation: likewise all three columns, with particular emphasis on interdimensional concepts. What varies is the set of criteria identified, which depends on what is being compared. One sees that the increasing difficulty found in Bloom’s taxonomy comes from the increasing combination of different components necessary to perform as required.
Recent revisions (Krathwohl, 2002) have changed some of the learning level labels, order, and qualities, but all “Bloom” tasks, old or more recent, must be analyzed to find the precise relations required. The section below describes the analysis requirements for effective instructional or learning environment design.

I. Analysis Requirements

- Given a relatively specific description of what learners are expected to do, perform a deficiency analysis that determines (a) whether a performance deficiency exists and, if so, (b) whether instructional or behavioral management problems, or both, need to be resolved.

- When specific tasks must be learned, perform a task analysis and specify terminal objectives for the instruction.

- When given a general educational goal, or the results from a task analysis, use a learning types hierarchy to analyze the content to be taught/learned in terms of differentiated (psychomotor), discriminative (simple cognitive), and extended (complex cognitive) relations.

- Given a differentiated (psychomotor) performance to be learned, perform a content analysis to determine if response learning, chain or other sequence learning, or kinesthetic repertoires are required.

- Given a discriminative (simple cognitive) performance to be learned, perform a content analysis to determine if occasion–behavior relations (paired associates), symmetric occasion–behavior relations (two-way paired associates), multiple occasion–behavior relations (multiple discriminations), conditional occasion–behavior relations, serial criterion sequences, successive criterion sequences, conditional criterion sequences, or verbal repertoires are required.

- Given an extended (complex cognitive) performance to be learned, perform a content analysis to determine if intradimensional concept learning, interdimensional concept learning, disjunctive concept learning, metonymical occasion–occasion relations, stimulus equivalence relations, ordered relations (joined concepts, principles, rules, conditional equivalences, etc.), or generative repertoires are required.

- Given a production task (essay writing, robot building, etc.), analyze it into its components, and submit the components to a content analysis.

- Given a loosely stated educational goal, probe the stater’s behavior to obtain further specificity. (The stater could be oneself but not necessarily.)

- Discriminate between objectives stated in specific behavioral form and objectives which are not so stated.

- Write objectives that include a statement of context (the tools or environment), domain (the pool from which examples will be drawn), the behavior required, and the performance standard to be met.

- Given generally stated objectives, further define these in whatever detail necessary for statement in specific behavioral terms.

- Given a behaviorally-stated objective, select those parts of it requiring further analysis techniques, e.g., illustrate the range (or generative characteristics)

- Given a set of terminal objectives, select applicable learning strategies as determined by the content analysis.

- Given an existing instructional module, state the terminal objectives implicit in the module.

- Given an existing test for an instructional module, state the terminal objectives implicit in the test covering the module.

- Given an existing instructional module, specify the minimum prerequisite behaviors required of learners.

- Given a specific behavioral objective and associated content for sample prerequisite skills, discriminate between essential and nonessential prerequisite skills (those that are likely to be absent versus those that are necessary, but likely to be in the student’s repertoire).

- Given a specific behavioral objective and associated content, specify in terms of behavior and content all prerequisite skills required of the learners.

- Given the elements of a decision system with all dependencies specified, prepare a PERT-type (decision tree, algorithm) analysis.

- Employ various heuristics for determining the “worth” (value) of a given set of potential course objectives.

- Employ various other heuristics to determine the attainability (feasibility) of a given set of potential course objectives.

- Identify the responses, response sequences, or kinesthetic repertoires contained in a given course or unit-sized chunk of conceptual subject matter.

- Identify the occasion–behavior relations, multiple discriminations, discriminative sequences or verbal repertoires contained in a given course or unit-sized chunk of conceptual subject matter.

- Identify the concepts, intradimensional or interdimensional, contained in a given course or unit-sized chunk of conceptual subject matter.

- When prospective learners must “understand” a stated concept, perform a concept analysis and specify terminal behavioral objectives, the achievement of which will satisfy subject matter experts.

- In conjunction with a subject matter expert (or group thereof), determine the critical attributes of each concept identified, the varying attributes of typical examples, and a set of close-in nonexamples of each concept.

- On the basis of the analysis of each concept, construct a conceptual hierarchy relating each concept to the others in terms of superordinate, coordinate, and subordinate relationships.

- Given a set of superordinate and subordinate concepts, describe the superordinate attributes that are inherited by subordinate concepts and distinguish between critical and defining properties of the subordinate concept (polygon: 2-D, closed figure, straight lines; triangle: polygon with 3 sides, which inherits all critical attributes of the superordinate polygon, with “3 sides” as the defining subordinate attribute).

- Identify the behaviors (usually written or spoken) that may be linked in a two-way (symmetric) association with concept examples (seeing an example of wider and saying “wider”; hearing “wider” and selecting the wider example; saying “wider” and having someone hand you the wider of two objects).

- Identify stimuli that share no features, but guide the same response (stopping at red light, stop sign, policeman with raised hand; place on a table, on a book, on a car).

- Identify and analyze any joined concepts contained in a given course or unit-sized chunk of complex subject matter (two or more concepts act together, e.g., small car).

- Identify the principles contained in a given course or unit-sized chunk of complex subject matter.

- Determine the critical concepts embedded in the principle, and the relation between the concepts, often stated in terms of “if, then” relations.

- Determine the generative procedures required to provide students with any inquiry skills required.

- Identify components that can be combined into new composite repertoires.

- Determine if the application of specific reasoning training (prescribed supplementary verbal stimulation that restricts response alternatives in accord with problem requirements) is appropriate.

- Determine if students need to acquire some component repertoires “on their own” (that is, components not provided as part of instruction) to be combined with those provided.

- Identify any repertoires required to interact with the technology requirements of the teaching/learning environment (computers, tablets, simulation equipment, etc.).

- Given specific behavioral objectives, determine appropriate practice strategies for the objective.

- Discriminate between repetitive and deliberate practice.

- Discriminate between spaced versus massed practice.

- Discriminate between accurate performance and fluent performance.

- Given a particular performance to be learned, determine the frequency of performance that would reasonably indicate the performance is fluent.

- Given an instructional sequence, determine the points in the sequence where there will be sufficient practice to ensure fluency.

- Given an instructional objective and a set of reinforcers, discriminate between the appropriate and inappropriate reinforcers, based on the characteristics of a population of learners.

- Given an instructional objective, specify effective and/or efficient reinforcers, based on the relevant characteristics of a population of learners.

- Discriminate between program–extrinsic and program–specific reinforcers, and determine which one or what mix of each may be used in the sequence.
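
The concept-analysis requirements above (critical attributes, inheritance from a superordinate concept, and examples versus nonexamples) can be sketched as a small data structure. This is a minimal illustration that reuses the polygon and triangle example from the list; treating a concept as a set of attribute labels is a simplifying assumption, and the class and method names are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Concept:
    name: str
    defining: FrozenSet[str]            # attributes added at this level
    parent: Optional["Concept"] = None  # superordinate concept, if any

    def critical_attributes(self) -> FrozenSet[str]:
        """Defining attributes plus everything inherited from superordinates."""
        inherited = self.parent.critical_attributes() if self.parent else frozenset()
        return inherited | self.defining

    def is_example(self, features: FrozenSet[str]) -> bool:
        """An instance is an example if it shows every critical attribute.

        Any extra features (color, size, ...) are varying attributes
        and do not affect membership.
        """
        return self.critical_attributes() <= features


polygon = Concept("polygon", frozenset({"2-D", "closed figure", "straight lines"}))
triangle = Concept("triangle", frozenset({"3 sides"}), parent=polygon)

# A close-in nonexample of "triangle": it shares every inherited attribute
# and differs only on the defining one.
square = frozenset({"2-D", "closed figure", "straight lines", "4 sides"})
# A new example with a varying attribute ("red") that should be ignored.
red_triangle = frozenset({"2-D", "closed figure", "straight lines", "3 sides", "red"})

if __name__ == "__main__":
    print(sorted(triangle.critical_attributes()))
    print(triangle.is_example(red_triangle), triangle.is_example(square))
```

Under this simplification, a close-in nonexample is an instance that lacks exactly one critical attribute, which is why the square is a more useful teaching nonexample for “triangle” than, say, a circle.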

Design

Designing instruction or creating learning environments involves the sequencing of activities that take a student from their entry repertoire to the repertoire described in the objectives. A range of sequences, problems, projects, inquiries, and discoveries can be designed to accomplish this outcome (see Johnson & Layng, 1992; Markle, 1990). The section below describes the design requirements for effective instructional or learning environment design.
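
As a rough illustration of sequencing from entry repertoire to terminal objective, a first-draft order can be derived from the dependencies among objectives with a topological sort. The sketch below uses Python's standard `graphlib` module (Python 3.9 or later); the objective names and the `prerequisites` mapping are invented for the example and are not taken from the source.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical objectives: each key maps to the set of objectives that
# must be mastered before it can be taught.
prerequisites: Dict[str, Set[str]] = {
    "solve two-step equations": {"solve one-step equations", "combine like terms"},
    "solve one-step equations": {"use inverse operations"},
    "combine like terms": set(),
    "use inverse operations": set(),
}


def sequence_objectives(prereqs: Dict[str, Set[str]]) -> List[str]:
    """Return one order in which every prerequisite precedes its dependents.

    graphlib raises CycleError if the stated dependencies are circular,
    which would signal a flaw in the analysis itself.
    """
    return list(TopologicalSorter(prereqs).static_order())


if __name__ == "__main__":
    for step, objective in enumerate(sequence_objectives(prerequisites), start=1):
        print(step, objective)
```

An order produced this way reflects only the logical analysis. It is a first draft, and it would still need to be checked against data from tryouts with students.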

II. Design Requirements

- With access to a set of typical students, devise measures of the familiarity of points in the content analysis hierarchy, for example, by testing recognition of examples and nonexamples of each concept. (It is not the case that low level concepts are necessarily learned before high level ones, despite the “logic” of such a statement.)

- Based on the results of the empirical data coordinated with a logical analysis, “logically” sequence instruction on unknown relations (e.g., concepts) by proceeding from familiar to unfamiliar. Some of these sequences will involve training in discriminating subclasses within a known larger class (e.g., subclasses of “insects”). Other sequences will involve training in extending to a broader class made up of known subclasses (e.g., class “herbivores” made up from cows and deer).

- For composite repertoires, ensure each component is acquired prior to asking for the composite performance.

- Based on the logical analysis, construct an appropriate relational (e.g., concept) learning criterion consisting of new examples and nonexamples of each class. (Actually, they are “new” in the sense that they will now be kept out of the instructional sequence and reserved for the testing sequence.)

- In designing content for teaching sequences, select widely differing examples and juxtapose these to create maximum extension.

- In designing content for teaching sequences, select the closest nonexamples possible for each critical attribute of the class, and juxtapose these to create fine discriminations.

- Depending on knowledge of the verbal sophistication of the target students, adjust the verbalization of the differences between examples and nonexamples and the similarity between examples and further examples to as simple a level as required.

- Reserve further examples and nonexamples for use in an expanded program should developmental testing demonstrate that the teaching is insufficient.

- In developmental testing, probe for student difficulties with varying attributes not located by subject matter experts but proving distracting to students and with distinctions proving too difficult.

- Check each expansion of the program against items reserved for the test to determine that one is still holding to the basic conceptual objective and is not “teaching the test.”

- Given a specific problem-solving process to be mastered, construct exercises that require applying the process to already-known phenomena and then extend to novel problems from the same domain.

- Given a set of problems to be solved (within or across domains), design sequences that require talk-aloud problem-solving qualities, beginning with simpler problems and building to more complex ones.

- Given a set of objectives and an intended population of learners, determine an appropriate level of student achievement at which developmental testing will cease. (The level reasonably may be raised or lowered based on practical experience and cost requirements during development.)

- Having determined there is sufficient time to do so, design the leanest possible draft of materials to achieve a given set of objectives and let student difficulties and queries determine the expansion at appropriate points.

- Given specific behavioral objectives, discriminate between appropriate and inappropriate content for objectives.

- Given specific behavioral objectives, generate rules for discrimination between appropriate and inappropriate content for the instructional model.

- Given a specific behavioral objective, describe examples of appropriate content.

- Given a terminal objective and intermediate objectives, sequence the intermediate objectives in an effective manner.

- Given a terminal objective and all necessary intermediate objectives, specify all the dependencies among the intermediate objectives and indicate potential branching (for adaptive sequences) before determination of student trial.

- Given a recording or transcript of student feedback, make changes in the instructional materials which retain the original objectives but reduce the likelihood of error by the next student.

- Given a set of discriminations to be learned, arrange them according to least potential for interference, if the learning ability of the students makes this possible.

- Given a set of component behaviors, determine whether a logical hierarchy is implied in sequencing these for instruction.

- Given a logical hierarchy, validate its existence by appropriate tryouts with students.

- Given a set of objectives with no predetermined sequence, select an appropriate first draft sequence when time does not permit further exploration with students.

- If time permits further exploration, determine which sequencing appeals most to students by observing their inquiry behaviors.

- Given a principle to be mastered, sequence it in the instruction in such a way that almost all elements in it have been mastered.

- Make first-draft adaptations of timing, vocabulary and sentence length, and question difficulty appropriate to the medium.

- Given a specific behavioral objective and content, construct elicitors, cues, problems, etc.

- Given a specific behavioral objective and content, describe appropriate responses.

- Given the essential specifications for instructional modules (objectives, content, criterion measures, elicitors, cues, problems, etc.), write effective instructional modules.

- Given summary data from the administration of an instructional module, identify ineffective frames, sequencing, stimuli, etc.

- Given a set of student entry repertoires and the amount of instructional testing time available, determine the degree of adaptation to build into the instructional sequence.

- Design specific adaptive sequences based on a content analysis in terms of the hierarchy of learning types (for example, in response to a concept’s nonexample being identified as an example, a specific branch to more instruction discriminating nonexamples of that specific type would be provided).

- Given a set of items to be memorized by students, design an instructional system which will permit individualization of practice according to student need.

- Given a program sequence, arrange for the occurrence of program–extrinsic reinforcers that may be required (points, badges, praise; making the subject matter fun).

- Given a program sequence, arrange for the occurrence of any program–specific reinforcers that may be required (finding the fun in the subject matter).

III. Media Selection Requirements

- Select an appropriate medium, given the demands imposed by the task or content analysis in interaction with the available funds, the on-going system, and the motivational requirements.

- Given an instructional objective and a set of instructional procedures using different media, discriminate between more appropriate and less appropriate uses of media.

- With instructional outcomes specified, estimate the cost (time and resources) of applying various instructional formats or technologies.

- Distinguish between the cost factors attributable to the functions of production in, or distribution by, any particular medium.

- Distinguish between fixed and variable media costs with respect to either the production or distribution function.

- Given the cheapest medium within which the instruction might operate (e.g., paper), prepare alternate suggestions for increasing the motivational impact.

- Select instructional media which optimize the benefit of all resources, i.e., cost of media, cost of instructional staff, and cost of student time committed to the instruction effort.

- Identify media by type (and combinations of types), i.e., discriminations or generalizations on the basis of three-dimensional properties, sound, motion, tactile, color, taste, or verbal description. As used here, 3-D refers to all aspects of spatial and relative location. Tactile includes texture, weight or mass, and psychomotor stimuli.

- Identify learning environments by type and combinations of types (i.e., paper, computers, tablets, interactive whiteboards) on the basis of the media required by the objectives or as a requirement of the project.

- Given an operationally specific objective, list the available instructional media capable of presenting the type of stimulus (or combination of stimuli) to which the student must attend, as specified by the indicator behavior and the conditions of the specific objective.

- Having listed available media for a given specific objective, consider all factors and select for the first draft trial the medium (or combination of media) which, on a best judgment basis, has a reasonable probability of conveying the instructional intent.

- During developmental testing of instruction, employ a “lean programming” rationale with respect to the mediated instruction, i.e., utilize prompting and other techniques appropriate to the media selected in order to direct attending-to behaviors to the salient aspect according to instructional intent.

- Recognize the point at which further efforts to “prompt” the initial selection of media are uneconomical during developmental testing and, at that point, shift to the “more representative” listed media along the continuum.

- Evaluate the level of “media sophistication” of the SME, basing initial media selection, in part, on this level (since one factor of cost results from the time demand required for the SME to interact with media production personnel).

- Control the understandable tendencies of graphics personnel to create “mini-works-of-art” when the limits of the instructional situation, i.e., a first-draft trial or a small number of students, do not justify an unreasonable expenditure of talent and production resources.
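
The cost requirements above distinguish production from distribution costs and fixed from variable costs. A minimal sketch of that bookkeeping follows. The media, the dollar figures, and the names `MediumCost` and `cheapest` are all hypothetical, and the sketch leaves out the staff and student time that a fuller comparison would also include.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class MediumCost:
    name: str
    production_fixed: float          # one-time cost to produce the materials
    production_per_student: float    # production cost that scales with enrollment
    distribution_fixed: float        # one-time cost to set up delivery
    distribution_per_student: float  # delivery cost that scales with enrollment

    def total(self, students: int) -> float:
        """Fixed costs plus per-student costs for a given enrollment."""
        fixed = self.production_fixed + self.distribution_fixed
        variable = (self.production_per_student + self.distribution_per_student) * students
        return fixed + variable


def cheapest(media: Iterable[MediumCost], students: int) -> MediumCost:
    """Pick the medium with the lowest total cost at this enrollment."""
    return min(media, key=lambda m: m.total(students))


# Illustrative figures only.
paper = MediumCost("paper workbook", 500.0, 0.0, 0.0, 4.0)
tablet = MediumCost("tablet app", 3000.0, 0.0, 200.0, 0.5)

if __name__ == "__main__":
    for n in (100, 1000):
        print(n, cheapest([paper, tablet], n).name)
```

With these made-up numbers the paper workbook is cheaper for a small tryout group and the tablet app is cheaper at larger enrollments. That is the kind of crossover that separating fixed from variable costs is meant to expose.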

Testing (Formative Evaluation)

A critical component to design is developmental testing, sometimes referred to as formative evaluation (Layng, Stikeleather, & Twyman, 2006; Markle, 1967). To a designer, student success resides in the sequence or learning environment design. Early designers followed the rule, “If the student errs, the program flunks.” When done correctly, the designer begins with a lean program design—that is, the minimal amount of instruction that the designer believes will result in the student reaching the objectives is provided. As developmental testing proceeds, student problems and difficulties provide the basis for design revisions. Often, this testing occurs one-on-one with the student talking aloud about their experience and what they are attempting to do. This procedure was first used in the original teaching machine project at Harvard University in the 1950s, and it is still a mainstay of good evaluation today (see Markle, 1989). The section below describes the testing requirements for effective instructional or learning environment design.
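
As a small illustration of letting student errors drive revision, the sketch below tallies errors per frame from tryout data and flags the frames whose error rate exceeds a threshold. The record format, the function name `frames_to_revise`, and the default threshold of 10% are assumptions made for the example, not values prescribed by the source.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def frames_to_revise(
    responses: Iterable[Tuple[str, bool]],
    max_error_rate: float = 0.1,  # assumed tolerance; set per project
) -> List[str]:
    """Return the frames whose observed error rate exceeds the tolerance.

    `responses` holds (frame_id, was_correct) pairs pooled across students.
    """
    attempts: Dict[str, int] = defaultdict(int)
    errors: Dict[str, int] = defaultdict(int)
    for frame_id, was_correct in responses:
        attempts[frame_id] += 1
        if not was_correct:
            errors[frame_id] += 1
    return sorted(
        frame for frame in attempts
        if errors[frame] / attempts[frame] > max_error_rate
    )


if __name__ == "__main__":
    tryout = [("f1", True), ("f1", True), ("f2", False), ("f2", True), ("f3", True)]
    print(frames_to_revise(tryout))
```

A tally of this kind only locates where students had trouble. Deciding what to change still depends on the one-on-one, talk-aloud sessions described above.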

IV. Testing (Formative Evaluation) Requirements

- Given specific behavioral objectives, develop appropriate criteria measures.

- Given sample measures, classify these according to a given classification scheme.

- Given sample measurement procedures, and associated instructional objectives, discriminate between appropriate and inappropriate measurement procedures.

- Produce exemplary criterion measures for each of the types in a given classification of types of learning.

- Construct criterion measures at different levels of difficulty for a given discrimination or relational learning problem.

- Given a two-way discrimination (symmetric association) task, construct appropriate criterion measures for each direction.

- Given a production task (such as essay writing or furniture building), develop an appropriate checklist for evaluating the product.

- Given a set of rules or principles to be mastered, determine the kinds of components involved in the rule and then devise tests for student mastery of the components.

- Analyze a given criterion measure into its component behaviors.

- Try a given set of criterion measures with appropriate students to be sure that they can be achieved by those who “know” and can’t by those who don’t.

- Given a statement of prerequisites, construct a test of these, validate that test, and then determine whether such prerequisites are rational for the general population to be served.

- Discriminate between percent correct and frequency measures of performance.

- Discriminate between stretch-to-fill and standard celeration charts (or their electronic equivalents) and their effects on the depiction of performance data.

- Given an instructional objective and the determined frequency of performance leading to fluency, use the standard celeration chart or equivalent to track performance changes.

- Determine changes in instructional programs or learning environments based on changes in performance frequency as depicted on a standard celeration chart or equivalent.

- Determine if the mix of program–specific and program–extrinsic reinforcers in the sequence will maintain student behavior through the sequence.

- Present a first draft of instructional materials to an appropriate student in a way which maximizes feedback from the student.

- Handle student errors and student criticism in a completely supportive manner, encouraging rather than discouraging student feedback.

- Probe student difficulties in a way which determines the source of the problems in the materials without giving away appropriate responses.

- Given a student or group of students going through a draft of instructional materials, observe all signs of boredom and disinterest and probe for student attitude in post-interviews.

- Given an adaptive (branching) solution, if time permits, test it against a less adaptive sequence during developmental testing to ascertain the effectiveness of the adaptive sequence.
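The distinction drawn in the requirements above between percent correct and frequency measures, and the use of a celeration chart to track change, can be illustrated with a little arithmetic. The following is a minimal sketch, not a prescribed procedure: the function names and sample numbers are hypothetical, and the least-squares fit on log-transformed frequencies is only one common way to estimate celeration (the multiplicative change in frequency per week).

```python
import math


def percent_correct(correct, incorrect):
    """Accuracy as a percentage; it ignores how long the performance took."""
    return 100.0 * correct / (correct + incorrect)


def frequency_per_minute(count, seconds):
    """Count per minute, the kind of rate measure plotted on a celeration chart."""
    return count * 60.0 / seconds


def weekly_celeration(days, frequencies):
    """Estimate the multiplicative change in frequency per week.

    Assumption for this sketch: fit a least-squares line to
    log10(frequency) against day, then convert the daily slope to a
    times-per-week factor. All frequencies must be greater than zero.
    """
    logs = [math.log10(f) for f in frequencies]
    n = len(days)
    mean_day = sum(days) / n
    mean_log = sum(logs) / n
    sxx = sum((d - mean_day) ** 2 for d in days)
    sxy = sum((d - mean_day) * (l - mean_log) for d, l in zip(days, logs))
    slope_per_day = sxy / sxx
    return 10 ** (slope_per_day * 7)


# Hypothetical data: two learners answer 20 items with no errors,
# one in 30 seconds and the other in 120 seconds.
fast = frequency_per_minute(20, 30)    # 40.0 correct per minute
slow = frequency_per_minute(20, 120)   # 10.0 correct per minute
same_accuracy = percent_correct(20, 0)  # 100.0 for both learners

# Hypothetical weekly probes: 10, 20, then 40 correct per minute.
growth = weekly_celeration([0, 7, 14], [10, 20, 40])  # approximately 2.0
```

Both learners score 100 percent, yet their frequencies differ by a factor of four, which is the information a percent-correct measure hides and a frequency measure keeps.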

System Management

Any design and development project requires that all the pieces fit together. A designer must be able to plan, execute, and administer the design project. The section below describes the system management requirements for effective instructional or learning environment design.

V. System Management Requirements

- Demonstrate sufficient familiarity with behavior-shaping techniques so that the Subject Matter Expert (SME), upon realizing what an exacting and time-consuming task he or she has committed to, will persist until the appearance of the first tangible evidence of instructional improvement (usually student behavior after exposure to the first draft of improved instruction).

- Operate in a nonaversive manner, hopefully from a data-base, in order to cause the policy-makers to question basic assumptions upon which many administrative procedures are based.

- Given an instructional module and criterion measures, construct a decision system to monitor the progress of the learner through the module.

- Given a set of instructional modules described for a population of learners, construct a decision system which identifies those modules individual learners require and are prepared to take, based on diagnostic testing.

- Given data on differential student achievement of prerequisites, construct an individualized track leading up to the main track.

- With instructional outcomes specified, estimate the cost (time and resources) of applying alternative existing instructional modules.

- Given educational prescriptions for a learner, select from within a system the correct diagnostic and achievement tests to be administered.

- Given the results of achievement and diagnostic testing for a learner, specify the correct instructional modules and starting points for an individual learner.

- For a learner progressing through a set of instructional modules, identify those points when criterion measures must be administered and select the correct criterion measures.

- When a selected criterion measure must be administered, administer the test and identify the correct decisions for subsequent instruction, based on the diagnostic system.

- Given raw data for a particular test, compute needed statistics (reliability, validity, difficulty level, required probabilities, etc.).

- Provided with raw data from diagnostic tests administered to a learner progressing through an instructional system, compute necessary statistics (probability of mastery, probability of successful completion, etc.).

- Given the results of the administration of instructional modules to a group of learners, develop more effective program–specific and program–extrinsic reinforcement schedules.
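Two of the statistics named in the requirements above, difficulty level and reliability, can be computed directly from raw item scores. The sketch below is illustrative only: the response matrix is made up, the function names are hypothetical, and Kuder-Richardson Formula 20 (KR-20) is used as one familiar reliability estimate for items scored right or wrong, not as the estimate the source requires.

```python
def item_difficulty(responses):
    """Proportion of learners answering each item correctly.

    `responses` is a list of rows, one per learner, with 1 for a
    correct answer and 0 for an incorrect answer on each item.
    """
    n_learners = len(responses)
    n_items = len(responses[0])
    return [sum(row[i] for row in responses) / n_learners
            for i in range(n_items)]


def kr20(responses):
    """Kuder-Richardson Formula 20 reliability for 0/1 scored items."""
    n_learners = len(responses)
    n_items = len(responses[0])
    p = item_difficulty(responses)
    totals = [sum(row) for row in responses]
    mean_total = sum(totals) / n_learners
    # Population variance of total scores, matching the p * (1 - p) item variances.
    var_total = sum((t - mean_total) ** 2 for t in totals) / n_learners
    if var_total == 0:
        raise ValueError("total scores do not vary; KR-20 is undefined")
    sum_pq = sum(pi * (1 - pi) for pi in p)
    return (n_items / (n_items - 1)) * (1 - sum_pq / var_total)


# Hypothetical raw data: four learners, three items.
responses = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
]

difficulty = item_difficulty(responses)  # [0.75, 0.5, 0.25]
reliability = kr20(responses)            # 0.75
```

With so few learners the numbers mean little in practice; the point is only to show how the raw data map onto the two statistics.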

Research Evaluation

Every day, new research articles from a range of sources and disciplines are published or otherwise shared, often online. There are also various discussion groups, webinars, and conferences where information is shared. Further, a great deal of work done more than 40 or 50 years ago is still highly relevant yet remains relatively obscure. For example, in 1971 a review of 235 cross-disciplinary studies found a broad consensus on how concepts should be taught (Clark, 1971). The procedure described is essentially the same as the procedures we advocate today. Yet one will find almost no use of these procedures in a typical classroom or in current teacher training textbooks. The section below describes the research evaluation requirements for effective instructional or learning environment design. There are other competencies related to behavioral research that should also be mastered (Becker, 1998; Booth, Colomb, & Williams, 2008).

VI. Research Evaluation Requirements

- Given an instructional goal or problem, identify the problem structure and match it to research on similar topics.

- Given a possible educational topic or current research related to teaching and learning, evaluate past research that exists on the topic (e.g., formative evaluation, adaptive learning).

- Distinguish between statistically significant and practically significant educational research results.

- Given a current research report, evaluate it on the basis of the thoroughness of the content analysis, identification of objectives, and clear statement of evaluation criteria.

- Given a current research report, describe alternative explanations that may account for the data or results.

- Given a current research report, determine if the research question is asking about individual or group behavior and if the appropriate design strategy was used to best answer the research question.

- Given a research question to be answered, determine if the question is asking about individual or group behavior.

- Given a research question concerning educational outcomes for individuals, construct a research design that directly controls experimental variables for each individual using the appropriate single subject design strategy.

- Given a research question concerning educational outcomes for groups, construct a research design that indirectly controls experimental variables by using the appropriate randomized trial group design strategy.
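The requirement above to distinguish statistically significant from practically significant results can be made concrete with a small calculation. This is a sketch under stated assumptions: the scores are synthetic and invented purely for illustration, the function names are hypothetical, the p-value uses a large-sample normal approximation, and Cohen's d is used as one common index of effect size.

```python
import math
from statistics import NormalDist, mean, variance


def cohens_d(a, b):
    """Standardized mean difference using the pooled sample variance."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled_var)


def two_sided_p_value(a, b):
    """Two-sided p-value from a large-sample normal approximation."""
    standard_error = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    z = (mean(a) - mean(b)) / standard_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Synthetic, made-up scores: 4,200 learners per group, spread evenly
# from 60 to 80, with the "treatment" group exactly one point higher.
control = [70 + (i % 21) - 10 for i in range(4200)]
treatment = [score + 1 for score in control]

p_value = two_sided_p_value(treatment, control)
effect_size = cohens_d(treatment, control)

print(f"p = {p_value:.2g}, d = {effect_size:.2f}")
```

With samples this large, a one-point difference yields a very small p-value, while the effect size stays below 0.2, a value conventionally described as small. A designer evaluating such a report would still need to ask whether a one-point gain justifies the cost of the intervention.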

References

- Becker, H. (1998). Tricks of the trade: How to think about your research while you’re doing it. Chicago, IL: The University of Chicago Press.

- Bloom, B. S., Engelhart, M. D., Furst, E. J., Hill, W. H., & Krathwohl, D. R. (Eds.). (1956). Taxonomy of educational objectives: The classification of educational goals. Handbook 1: Cognitive domain. London, UK: Longmans, Green & Co. Ltd.

- Booth, W., Colomb, G., & Williams, J. (2008). The craft of research (3rd ed.). Chicago, IL: The University of Chicago Press.

- Clark, D. C. (1971). Teaching concepts in the classroom: A set of teaching prescriptions derived from experimental research. Journal of Educational Psychology Monograph, 62, 253-278.

- Hendrix, V. L., & Tiemann, P. W. (1971). Designs for designers: Instructional objectives for instructional developers. NSPI Journal, 10(6), 11-15.

- Johnson, K. R., & Layng, T. V. J. (1992). Breaking the structuralist barrier: Literacy and numeracy with fluency. American Psychologist, 47, 1475-1490.

- Koszalka, T. A., Russ-Eft, D. F., & Reiser, R. (2013). Instructional designer competencies: The standards (4th ed.). Charlotte, NC: Information Age Publishing.

- Krathwohl, D. R. (2002). A revision of Bloom’s taxonomy: An overview [Electronic Version]. Theory into Practice, 41(4), 212-218.

- Layng, T. V. J. (2007, August). Theory of mind and complex verbal relations. Invited workshop presented at the National Autism Conference, Pennsylvania State University. University Park, PA.

- Layng, T. V. J., Stikeleather, G., & Twyman, J. S. (2006). Scientific formative evaluation: The role of individual learners in generating and predicting successful educational outcomes. In R. Subotnik & H. Walberg (Eds.), The scientific basis of educational productivity. Charlotte, NC: Information Age Publishing.

- Layng, T. V. J., & Twyman, J. S. (2013). Education + technology + innovation = learning? Handbook on Innovations in Learning. Philadelphia, PA: Center for Innovation in Learning.

- Leon, M., Layng, T. V. J., & Sota, M. (2011). Thinking through text comprehension III: The programing of verbal and investigative repertoires. The Behavior Analyst Today, 12, 21-32.

- Markle, S. M. (1967). Empirical testing of programs. In P. C. Lange (Ed.), Programmed instruction: Sixty-sixth yearbook of the National Society for the Study of Education: 2 (pp. 104-138). Chicago, IL: University of Chicago Press.

- Markle, S. M. (1989). The ancient history of formative evaluation. Performance and Instruction, August, 27-29.

- Markle, S. M. (1990). Designs for instructional designers. Seattle, WA: Morningside Press.

- Markle, S. M., & Tiemann, P. W. (1967). Programing is a process: Slide/tape interactive program. Chicago, IL: Tiemann & Associates.

- Robbins, J. K. (2011). Problem solving, reasoning, and analytical thinking in a classroom environment. The Behavior Analyst Today, 12, 42-50.

- Skinner, B. F. (1957). Verbal behavior. Englewood Cliffs, NJ: Prentice-Hall.

- Sota, M., Leon, M., & Layng, T. V. J. (2011). Thinking through text comprehension II: Analysis of verbal and investigative repertoires. The Behavior Analyst Today, 12, 11-20.

- Tiemann, P. W., & Markle, S. M. (1991). Analyzing instructional content. Seattle, WA: Morningside Press.

- Twyman, J. S., Layng, T. V. J., Stikeleather, G., & Hobbins, K. (2004). A non-linear approach to curriculum design: The role of behavior analysis in building an effective reading program. In W. L. Heward, T. E. Heron, N. A. Neef, S. M. Peterson, D. M. Sainato, G. Y. Cartledge, et al. (Eds.), Focus on behavior analysis in education: Achievements, challenges, and opportunities (pp. 55-68). Upper Saddle River, NJ: Pearson.

- Welch, W., Hendrix, V., Johnson, P., & Terwilliger, J. (1970). Conceptual papers defining the knowledge and skills required to function as educational developers and evaluators. Design Document II for the Midwest Educational Training Center. Minneapolis, MN: Upper Midwest Regional Educational Laboratory.
